Embodied AI agents take physical forms, such as robots and smart devices, or appear as digital avatars in virtual spaces, and in either case they engage directly with their surroundings. Unlike static, web-based bots, these agents perceive environmental cues and take purposeful actions. Their tangible presence can boost user confidence and supports forms of learning that mimic human patterns. Breakthroughs in large language models and vision-enabled AI now help these agents devise plans, draw inferences, and adjust strategies to meet user requirements. They store relevant context, maintain memory across sessions, and collaborate with humans or request clarification when needed.

At Meta AI, researchers are developing methods for embodied agents (robotic assistants, wearable gadgets, and virtual personas) to sense, learn from, and interact with users in real or simulated worlds. Central to this effort is building a “world model” that merges sensory data, reasoning routines, memory storage, and action planning so agents can interpret space and recognize human intent. Planned next steps include tighter collaboration features, improved social awareness, robust protections for user privacy, and safeguards against the risks associated with overly humanlike behavior.

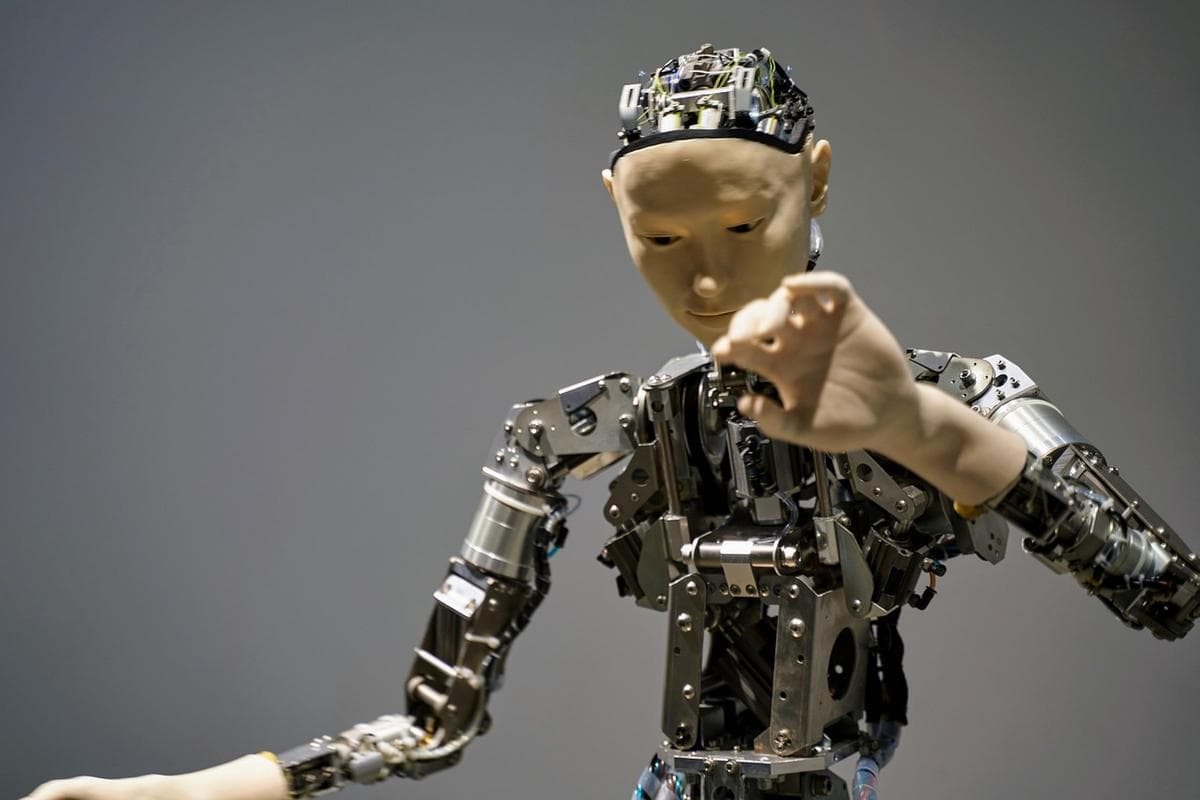

These AI agents generally appear in three categories: virtual, wearable, and robotic, all designed to interact in a manner similar to people. Virtual agents—like therapy-focused chatbots or immersive avatars in shared online spaces—simulate emotional responses to support more genuine exchanges. Wearable versions, embedded in smart glasses or headsets, relay real-time visuals and context-sensitive prompts to help with tasks or boost cognitive performance. Mobile robots navigate physical areas, taking on tough or high-risk duties such as elder care or emergency response. These varied agents not only assist with tasks but also learn from real-world feedback.

World models form the backbone of these capabilities by bringing together inputs from vision, audio, and touch with memory and logical inference. This unified representation allows agents to forecast the impact of their actions, craft effective plans, and adjust behavior as situations evolve. By combining environmental details with an understanding of user goals, world models enable AI systems to carry out complex tasks autonomously and provide more natural, intuitive assistance in a variety of scenarios.
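The forecast-then-plan loop described above can be illustrated with a deliberately tiny sketch. It is not the architecture from the paper: the one-dimensional world, the `State` fields, and the `predict`, `cost`, `plan`, and `run` helpers are all hypothetical names chosen for illustration, and the "world model" here is a hand-written function rather than a learned one. The point is only to show how an agent might imagine the outcome of candidate actions before committing to one.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class State:
    """Hypothetical fused state: what the agent perceives plus the user's goal."""
    position: int  # agent's location on a 1-D line
    goal: int      # location the user wants the agent to reach


ACTIONS = (-1, 0, 1)  # step left, stay, step right


def predict(state: State, action: int) -> State:
    """Toy world model: forecast the next state for a candidate action."""
    return State(position=state.position + action, goal=state.goal)


def cost(state: State) -> int:
    """Distance to the goal; lower is better."""
    return abs(state.goal - state.position)


def plan(state: State, horizon: int = 3) -> int:
    """Imagine every action sequence up to `horizon` and return the first
    action of the sequence with the lowest accumulated cost."""
    best_action, best_cost = 0, float("inf")
    for sequence in product(ACTIONS, repeat=horizon):
        imagined, total = state, 0
        for action in sequence:
            imagined = predict(imagined, action)
            total += cost(imagined)
        if total < best_cost:
            best_action, best_cost = sequence[0], total
    return best_action


def run(start: int, goal: int, max_steps: int = 20) -> list:
    """Replan at every step; in this toy the real world matches the model."""
    state = State(start, goal)
    trajectory = [state.position]
    for _ in range(max_steps):
        if state.position == state.goal:
            break
        state = predict(state, plan(state))
        trajectory.append(state.position)
    return trajectory


print(run(0, 3))  # positions visited on the way to the goal
```

Replanning at every step, rather than executing a whole imagined sequence, is one simple way to let behavior adjust as the situation changes. A real system would replace `predict` with a learned model over multimodal inputs.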

Achieving genuine autonomy requires combining passive, data-driven training (such as vision-language alignment) with active, trial-based learning such as reinforcement learning. Passive methods excel at spotting patterns within large data sets but lack grounding in direct action. Hands-on training, by contrast, helps agents develop practical skills yet can be slow and resource-intensive. Hybrid solutions draw on both strengths to help AI form abstract concepts and translate them into goal-directed behavior. Looking forward, coordinating multiple agents introduces demands for seamless communication, clear task distribution, and conflict management. Emerging techniques such as machine-mediated negotiation, shared representation learning, and multi-agent reinforcement learning will support groups of systems working together.
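As a rough illustration of the hybrid idea, the sketch below first estimates action values from a fixed log (the passive phase) and then refines them through direct interaction (the active phase). Everything in it is an assumption made for the example: the action names, the reward numbers, the `environment` stand-in, and the simple explore-every-few-steps schedule are hypothetical and are not taken from the paper.

```python
# Hypothetical action names and rewards, invented for illustration only.
TRUE_REWARD = {"wave": 0.2, "speak": 0.5, "hand_over": 0.9}
ACTIONS = list(TRUE_REWARD)


def environment(action):
    """Stand-in for real interaction: returns a fixed reward per action."""
    return TRUE_REWARD[action]


def passive_pretrain(logged):
    """Passive phase: average the rewards recorded in a fixed log."""
    totals, counts = {}, {}
    for action, reward in logged:
        totals[action] = totals.get(action, 0.0) + reward
        counts[action] = counts.get(action, 0) + 1
    values = {a: totals[a] / counts[a] for a in totals}
    return values, counts


def active_finetune(values, counts, steps=30, explore_every=5):
    """Active phase: act, observe the reward, and update a running mean.
    Every `explore_every`-th step tries the actions in turn; all other
    steps pick the action currently believed to be best."""
    values, counts = dict(values), dict(counts)
    for a in ACTIONS:
        values.setdefault(a, 0.0)  # actions never seen in the log start at 0
        counts.setdefault(a, 0)
    for step in range(steps):
        if step % explore_every == 0:
            action = ACTIONS[(step // explore_every) % len(ACTIONS)]
        else:
            action = max(ACTIONS, key=values.get)
        reward = environment(action)
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]
    return values


# The log never contains "hand_over", so passive learning cannot rate it.
LOGGED = [("wave", 0.2), ("wave", 0.2), ("speak", 0.5)]
prior, seen = passive_pretrain(LOGGED)
tuned = active_finetune(prior, seen)
best_before = max(prior, key=prior.get)
best_after = max(tuned, key=tuned.get)
print(best_before, best_after)
```

The passive phase gives the agent a usable starting point at no interaction cost, while the active phase discovers an option the log never covered. That mirrors the trade-off described above, in miniature.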

The study explores how embodied AI agents, whether virtual avatars, wearable tools, or autonomous robots, can perceive their context, acquire new abilities, and respond effectively in diverse environments. A well-structured “world model” remains essential, helping these agents interpret context, anticipate outcomes, and chart appropriate actions. Applications are already evident in therapeutic services, interactive media, and on-the-fly operational support. As deployment expands, questions surrounding data security and the ethics of creating systems that emulate human traits demand rigorous scrutiny. Ongoing work will focus on refining learning processes, strengthening cooperative behaviors, and deepening social understanding to enable more thoughtful, responsible interaction between humans and machines.

Complete research details appear in a paper published by the project’s development team.
